Qwen, Alibaba’s AI research team, has released Qwen3-Coder-480B-A35B-Instruct, a significant step forward for open-source coding models. The model uses a Mixture-of-Experts architecture with 480 billion total parameters, of which 35 billion are active per token (the “A35B” in its name), and is the most capable agentic coding model the Qwen team has released to date.

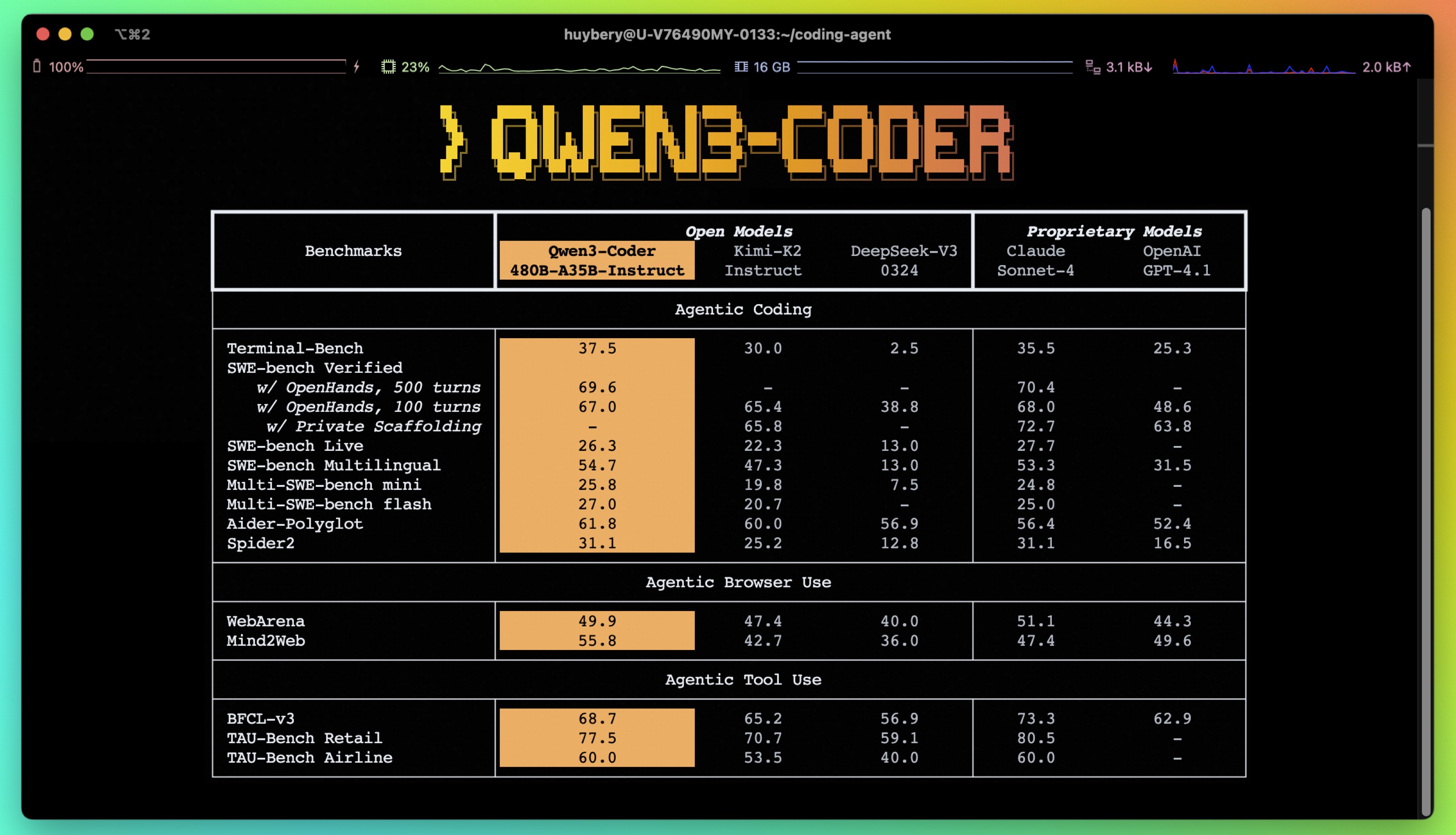

Qwen3-Coder-480B-A35B-Instruct sets a new benchmark among open models for agentic coding, agentic browser use, and tool use, with performance comparable to leading proprietary models such as GPT-4.1 and Claude Sonnet 4. In testing, the model scored 61.8% on the Aider-Polyglot benchmark. It supports a native context window of 256,000 tokens, which can be extended to as many as 1 million tokens.

A key strength of the model is that it runs on a range of platforms and hardware configurations. Developers can deploy Qwen3-Coder locally with tools such as Ollama, LM Studio, MLX-LM, or llama.cpp, and the model's API is OpenAI-compatible, so existing client code can be pointed at it with little change for both testing and production use.
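Because the API follows OpenAI conventions, a request to a locally served model can be sketched with nothing but the Python standard library. This is a minimal illustration, not the official sample code: the server address, port, and served model name below are assumptions and depend on how the model is actually deployed.

```python
import json
import urllib.request

# Assumptions (not from the article): a local OpenAI-compatible server is
# listening at this URL and serves the model under this name.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "Qwen3-Coder-480B-A35B-Instruct"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME, max_tokens=512):
    """Build a POST request for the OpenAI-style /chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=120):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `send_chat_request(build_chat_request("Write a quicksort in Python."))` would return the generated text. Keeping request construction separate from sending makes the payload easy to inspect before anything goes over the network.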

Beyond code generation, Qwen3-Coder-480B-A35B-Instruct offers “agentic” capabilities: it can decide on its own when to call external tools and functions while working through a task. Results on benchmarks including SWE-bench, WebArena, and BFCL-v3 show performance on par with industry leaders.
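To make tool calling concrete, the sketch below shows the client side of one round trip, assuming the OpenAI-style function-calling message format that OpenAI-compatible servers generally use. The `count_lines` tool, the `REGISTRY` mapping, and `run_tool_call` are hypothetical names invented for illustration; they are not part of the Qwen3-Coder release.

```python
import json


# Hypothetical tool for illustration; not part of the Qwen3-Coder release.
def count_lines(text):
    """Return the number of lines in a string."""
    return len(text.splitlines())


# Tool description in the OpenAI-style function-calling schema; a list like
# this would be sent as the "tools" field of a chat completion request.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "count_lines",
            "description": "Count the lines in a block of text.",
            "parameters": {
                "type": "object",
                "properties": {"text": {"type": "string"}},
                "required": ["text"],
            },
        },
    }
]

# Maps tool names the model may request to local Python callables.
REGISTRY = {"count_lines": count_lines}


def run_tool_call(tool_call):
    """Execute one tool call and build the 'tool' message to send back."""
    name = tool_call["function"]["name"]
    # The arguments arrive as a JSON-encoded string, not a dict.
    arguments = json.loads(tool_call["function"]["arguments"])
    result = REGISTRY[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }
```

In an agent loop, each entry in the assistant message's `tool_calls` list would be passed through `run_tool_call`, and the resulting messages appended to the conversation before asking the model to continue.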

The release includes thorough documentation, recommended parameter settings, and sample code for practical use. The model can generate outputs of up to about 65,000 tokens and can be fine-tuned for specific applications. All resources, technical details, and technical reports are available on Qwen’s official GitHub page.

The release marks a major step in the evolution of open-source AI-powered coding and positions Qwen3-Coder as an important resource for developers worldwide.