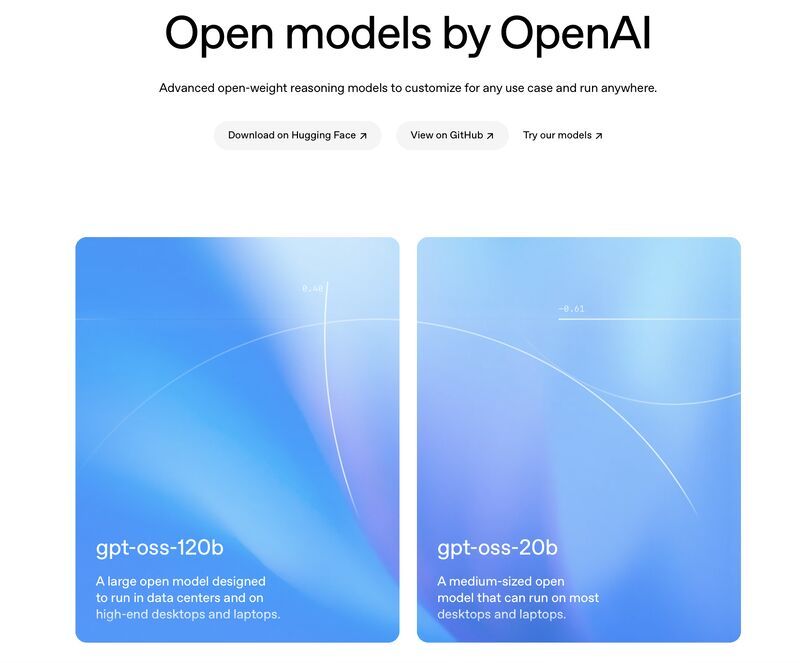

OpenAI has introduced two new open-weight models—GPT-OSS-120B and GPT-OSS-20B—marking its first open-weight release since GPT-2 and signaling a strategic shift. Both models are available for free under the Apache 2.0 license on Hugging Face, with reference runtimes for PyTorch and Apple Metal and pre-quantized MXFP4 options.

Built on a Transformer-based mixture-of-experts (MoE) design, the models route each token to only a small subset of experts, which cuts the number of active parameters per token and improves cost-efficiency. They support a context window of up to 128K tokens and combine RoPE positional embeddings, grouped multi-query attention, and alternating dense and locally banded sparse attention layers to improve memory and compute efficiency.
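
To make the routing idea concrete, here is a minimal sketch of top-k expert selection for a single token. It is a toy illustration, not OpenAI's implementation: the function names (`route_top_k`, `moe_forward`), the scalar "experts", the router scores, and the choice of `k=2` are all assumptions made for the example.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts for one token.

    Returns (expert_index, weight) pairs; the weights are a softmax
    over the selected experts' scores only, so they sum to 1.
    """
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts only.

    Experts that were not selected are never called, which is where
    the compute saving of a sparse MoE layer comes from.
    """
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Four toy "experts"; in a real model each would be a feed-forward network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
logits = [0.1, 2.0, 2.0, -1.0]  # hypothetical router scores for one token

selected = route_top_k(logits, k=2)
y = moe_forward(3.0, experts, logits, k=2)
print(selected, y)
```

Only two of the four experts run for this token, so the per-token cost scales with `k` rather than with the total number of experts.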

On hardware, GPT-OSS-120B can run on a single GPU with 80 GB of memory, while GPT-OSS-20B needs only 16 GB, which puts it within reach of edge devices and consumer hardware. Microsoft is optimizing the 20B model for Windows via ONNX Runtime. Deployment is supported across platforms including Hugging Face, Azure, AWS, Databricks, Vercel, and Cloudflare, with hardware optimizations for NVIDIA, AMD, Cerebras, and Groq.
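
A rough back-of-the-envelope calculation shows why 4-bit quantization matters for these memory figures. The sketch below rests on several simplifying assumptions: it uses the nominal parameter counts implied by the model names (120B and 20B), treats every weight as MXFP4, takes MXFP4 to cost 4 bits per element plus one 8-bit scale shared across each block of 32 elements, and ignores the KV cache, activations, and runtime overhead. Real footprints will differ.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Assumed MXFP4 cost: 4-bit element + 8-bit scale shared by a 32-element block.
MXFP4_BITS = 4 + 8 / 32   # 4.25 bits per parameter
BF16_BITS = 16

# Nominal parameter counts taken from the model names (an assumption).
models = [("GPT-OSS-120B", 120e9, 80), ("GPT-OSS-20B", 20e9, 16)]

for name, params, budget_gb in models:
    mxfp4 = weight_memory_gb(params, MXFP4_BITS)
    bf16 = weight_memory_gb(params, BF16_BITS)
    print(f"{name}: ~{mxfp4:.1f} GB in MXFP4 vs ~{bf16:.0f} GB in BF16 "
          f"(budget: {budget_gb} GB)")
```

Under these assumptions the quantized weights fit inside the stated 80 GB and 16 GB budgets with some headroom, whereas the same weights in 16-bit precision would not.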

On performance, OpenAI reports strong results against o3, o3-mini, and o4-mini across benchmarks such as Codeforces (competitive coding), MMLU and HLE (general knowledge), AIME 2024 and 2025 (math), HealthBench (medical), and TauBench (tool and function calling). GPT-OSS-120B scores close to or above o4-mini on many of these tests, while the smaller GPT-OSS-20B matches or surpasses o3-mini on several.

Safety measures include filtering CBRN (chemical, biological, radiological, and nuclear) content during pretraining and additional post-training safeguards to resist harmful requests. For adversarial stress tests, OpenAI built non-refusing variants fine-tuned for domains such as biology and cybersecurity; OpenAI reports that even these variants showed limited capabilities, and the methodology was reviewed by external experts. The models' chain-of-thought was not directly supervised during training, so that it remains useful for monitoring model behavior; OpenAI advises developers not to expose these raw traces to end users. To further strengthen community oversight, OpenAI launched a red teaming competition with a total prize pool of $500,000.

Overall, GPT-OSS-120B and GPT-OSS-20B target a broad spectrum from low-cost local setups to production-scale enterprise deployments. With open licensing, optimized distribution paths, and competitive benchmarks, OpenAI is making a notable return to the open ecosystem.