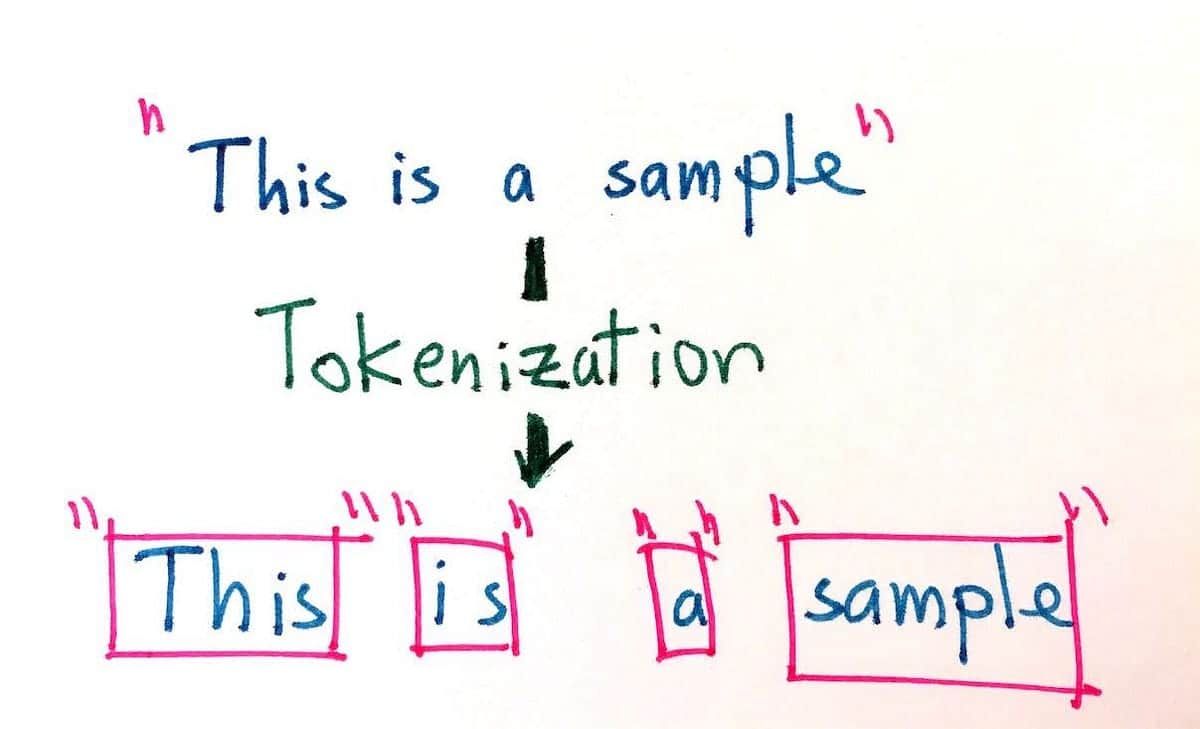

Tokenization is a crucial step in Natural Language Processing (NLP), but for agglutinative languages such as Turkish, Hungarian, and Finnish, where a single word can carry a long chain of suffixes, standard approaches often fall short. A new study by researchers from Yıldız Technical University, Yeditepe University, University of Chicago, and Istanbul Bilgi University proposes a framework for evaluating how well tokenizers preserve the linguistic structure of such languages, using Turkish as the benchmark.

Using TR-MMLU, a Turkish dataset of 6,200 multiple-choice questions modeled on the Massive Multitask Language Understanding (MMLU) benchmark, the study introduces five metrics to measure tokenization effectiveness:

✅ Vocabulary size

✅ Token count

✅ Processing time

✅ Language-specific token percentage (%TR)

✅ Token purity (%Pure)
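The first three metrics are simple to collect. A minimal Python sketch, assuming the tokenizer is exposed as a plain function from text to a list of token strings; `efficiency_metrics`, `whitespace_tokenize`, and the sample sentences are hypothetical illustrations, not the study's code:

```python
import time
from typing import Callable, Dict, Iterable, List


def efficiency_metrics(
    tokenize: Callable[[str], List[str]],
    vocab_size: int,
    texts: Iterable[str],
) -> Dict[str, float]:
    """Measure vocabulary size, total token count and tokenization time."""
    start = time.perf_counter()
    token_count = sum(len(tokenize(text)) for text in texts)
    elapsed = time.perf_counter() - start
    return {
        "vocab_size": vocab_size,       # reported by the tokenizer, not measured
        "token_count": token_count,     # total tokens over the whole dataset
        "processing_time_s": elapsed,   # wall-clock seconds for one pass
    }


def whitespace_tokenize(text: str) -> List[str]:
    # Toy stand-in tokenizer for illustration; real tokenizers split subwords.
    return text.split()


# Hypothetical toy inputs, not taken from TR-MMLU.
questions = ["Türkiye'nin başkenti neresidir?", "Evlerimizden geliyoruz."]
print(efficiency_metrics(whitespace_tokenize, vocab_size=50_000, texts=questions))
```

With a Hugging Face `transformers` tokenizer, `tok.tokenize` and `len(tok)` could stand in for the toy function and vocabulary size. Timing a single pass is noisy, so repeating the run and averaging gives a steadier figure.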

Where the first three metrics measure efficiency, the last two target linguistic quality: %TR is the percentage of tokens that are valid words in the target language, while %Pure is the percentage of tokens that align with meaningful linguistic units such as roots and morphemes. The results show that %TR correlates more strongly with model performance on the benchmark than %Pure does, and that larger models do not necessarily deliver better linguistic performance.
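To make %TR and %Pure concrete, here is a minimal sketch under stated assumptions: a token counts toward %TR if, after stripping subword markers, it appears in a word lexicon, and toward %Pure if it matches a single root or affix from a morpheme inventory. The lexicon, the root and affix sets, the marker list, the example segmentation, and the choice to count distinct tokens are all illustrative assumptions; the study's own procedure may differ.

```python
from typing import Dict, Iterable, Set

# Assumed subword markers (SentencePiece "▁", WordPiece "##"). Byte-level BPE
# tokens would need decoding back to UTF-8 text before this lookup.
SUBWORD_MARKERS = ("▁", "##")


def normalize(token: str) -> str:
    """Strip subword markers and lowercase a token before lookup."""
    for marker in SUBWORD_MARKERS:
        token = token.replace(marker, "")
    # Caveat: str.lower() is not Turkish-aware ("I" becomes "i", not "ı").
    return token.lower()


def language_metrics(
    tokens: Iterable[str],
    lexicon: Set[str],
    roots: Set[str],
    affixes: Set[str],
) -> Dict[str, float]:
    """Return %TR and %Pure over the distinct normalized tokens."""
    unique = {normalize(t) for t in tokens}
    unique.discard("")  # a bare marker normalizes to an empty string
    if not unique:
        return {"%TR": 0.0, "%Pure": 0.0}
    valid_words = sum(1 for t in unique if t in lexicon)
    pure = sum(1 for t in unique if t in roots or t in affixes)
    return {
        "%TR": 100.0 * valid_words / len(unique),
        "%Pure": 100.0 * pure / len(unique),
    }


# Hypothetical segmentation of "Evlerimizden geliyoruz" ("we are coming
# from our houses") and toy lexicon/morpheme sets, for illustration only.
example_tokens = ["▁Ev", "lerimiz", "den", "▁gel", "iyoruz"]
lexicon = {"ev", "gel", "evlerimizden", "geliyoruz"}
roots = {"ev", "gel"}
affixes = {"ler", "imiz", "den", "iyor", "uz"}

print(language_metrics(example_tokens, lexicon, roots, affixes))
# {'%TR': 40.0, '%Pure': 60.0}
```

With these toy sets, "lerimiz" counts against both metrics because it fuses two suffixes (-ler, -imiz) into one token, the kind of boundary error %Pure is meant to surface, while "den" is a pure affix but not a standalone word, which shows how the two metrics diverge.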

The study makes the case for language-specific tokenization standards that keep models efficient while respecting the structure of morphologically complex languages. Future work will focus on improving morphological analysis and extending the evaluation to other languages.

Source: https://arxiv.org/abs/2502.07057