OpenAI unveils GPT-5: a hybrid that chooses when to think

On August 7, 2025, OpenAI announced GPT-5, a unified system that routes between a fast responder and a deeper “thinking” model in real time. The system’s router selects the appropriate submodel according to conversation complexity, tool needs and explicit user intent; mini/nano fallbacks manage load when limits are reached.

Benchmark gains and reasoning improvements

OpenAI reports meaningful improvements on academic and practical benchmarks — math (AIME), software engineering benchmarks, multimodal understanding and health evaluations — and highlights that GPT-5’s “thinking” mode substantially reduces hallucinations and deceptive assertions in complex, open-ended queries. The company also claims that when using reasoning, GPT-5 achieves more accurate, honest responses compared with prior models.

API features and token economics

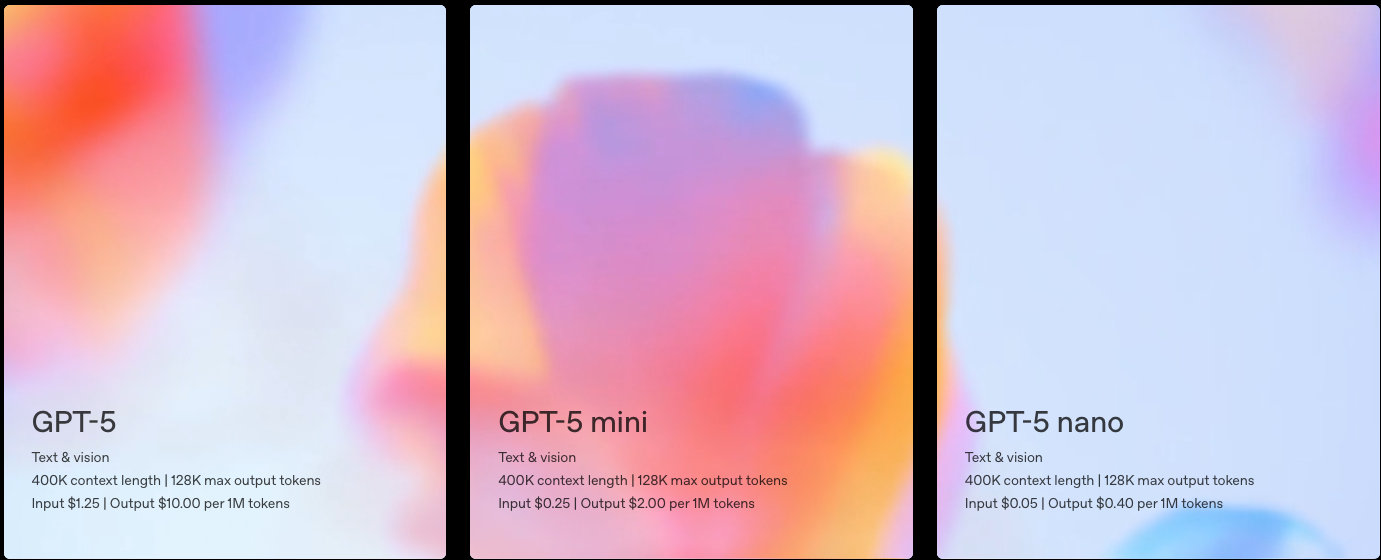

The GPT-5 family is exposed as regular, mini and nano models; API options include different reasoning-effort levels and visible reasoning summaries. Input token limits are very large (≈272k tokens) and output windows similarly wide, enabling long conversational or document contexts. Pricing is competitive, with per-token rates that undercut some previous OpenAI tiers and notable short-term token-cache discounts that reduce input costs for active conversations.

Safety tradeoffs: progress but not solved

OpenAI introduces training and post-training changes aimed at reducing sycophancy and hallucination and proposes “safe-completions” as a more nuanced approach than binary refusals. Third-party assessments show improved resilience to prompt-injection attacks relative to older models but not elimination — prompt-injection remains an unsolved operational risk that developers must mitigate.

What this means

For product teams, GPT-5’s combination of stronger reasoning, lower marginal token costs and big context windows will likely accelerate richer conversational UIs and more ambitious multi-step agents. For regulated domains (health, legal, finance), improvements matter — but so does conservative deployment: human oversight, tool-enabled verification and conservative safety policies will remain essential.