Apple has announced FastVLM, a new-generation Vision Language Model (VLM) designed to stay efficient when working with high-resolution images. Introduced at the CVPR 2025 conference, FastVLM targets the "accuracy-latency" trade-off that constrains traditional VLMs: higher input resolution improves accuracy but slows the model down.

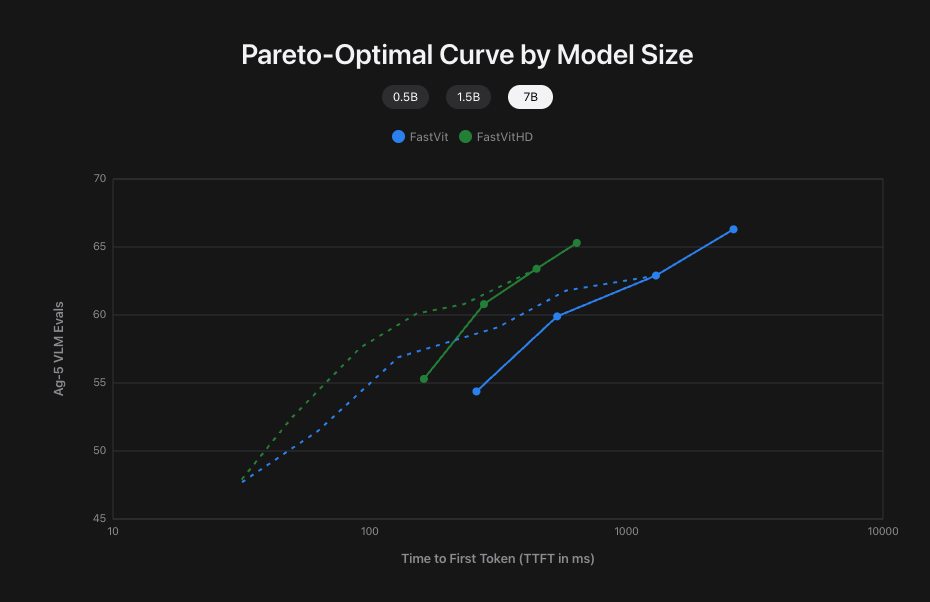

At the core of FastVLM lies FastViTHD, a hybrid visual encoder engineered for high-resolution imagery. In a traditional VLM, raising the image resolution improves accuracy, but it also slows the visual encoder and produces more visual tokens, which in turn lengthens the LLM's pre-filling time. FastViTHD tackles this with techniques such as multi-scale pooling, additional self-attention layers, and downsampling, so that it produces fewer but higher-quality visual tokens. For instance, at an input resolution of 336, it generates 4 times fewer tokens than FastViT and 16 times fewer tokens than ViT-L/14.
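To make those ratios concrete, the short sketch below works out the token counts they imply. It assumes a plain ViT emits one token per non-overlapping patch and ignores any class token. The FastViTHD and FastViT counts are derived purely from the "16 times" and "4 times" ratios quoted above, not from the encoders themselves, and the function name is illustrative.

```python
def vit_patch_tokens(resolution: int, patch_size: int) -> int:
    """Patch tokens a plain ViT emits for a square image (class token ignored)."""
    if resolution % patch_size != 0:
        raise ValueError("resolution must be divisible by patch_size")
    side = resolution // patch_size
    return side * side


# ViT-L/14 uses 14x14 patches, so a 336x336 image becomes a 24x24 grid.
vit_l14_tokens = vit_patch_tokens(336, 14)

# Counts implied by the quoted ratios (assumption: the ratios are exact).
fastvithd_tokens = vit_l14_tokens // 16  # 16 times fewer than ViT-L/14
fastvit_tokens = fastvithd_tokens * 4    # FastViTHD is 4 times fewer than FastViT

print(f"ViT-L/14:  {vit_l14_tokens} tokens")
print(f"FastViT:   {fastvit_tokens} tokens (implied)")
print(f"FastViTHD: {fastvithd_tokens} tokens (implied)")
```

Under these assumptions, the LLM would pre-fill 36 visual tokens instead of 576 at the same input resolution, which is where much of the latency saving would come from.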

This architecture lets FastVLM run far more efficiently while maintaining high accuracy. In comparisons using the same 0.5B LLM, FastVLM achieves an 85 times faster Time-to-First-Token (TTFT), the delay before the model emits its first output token, and its visual encoder is 3.4 times smaller than that of LLaVA-OneVision. This makes FastVLM well suited to applications that demand real-time interaction and on-device operation, such as the anticipated smart glasses.
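As a rough illustration of what TTFT captures, the sketch below times how long a streaming model takes to yield its first token. `fake_vlm_stream` is a hypothetical stand-in rather than FastVLM's API, and its sleep durations are arbitrary placeholders for the visual-encoding and pre-fill stages, not measured values.

```python
import time
from typing import Callable, Iterator, Tuple


def measure_ttft(start_stream: Callable[[], Iterator[str]]) -> Tuple[str, float]:
    """Return the first token and the seconds elapsed until it arrived."""
    start = time.perf_counter()
    stream = start_stream()
    first_token = next(stream)  # blocks until the model yields a token
    return first_token, time.perf_counter() - start


def fake_vlm_stream(encode_s: float = 0.02, prefill_s: float = 0.03) -> Iterator[str]:
    """Hypothetical stand-in for a VLM: encode the image, pre-fill, then decode."""
    time.sleep(encode_s)   # placeholder for the visual encoder
    time.sleep(prefill_s)  # placeholder for LLM pre-fill over the visual tokens
    yield "Hello"
    yield " world"


first_token, ttft = measure_ttft(fake_vlm_stream)
print(f"first token {first_token!r} after {ttft * 1000:.1f} ms")
```

Both stages sit in front of the first token, so a faster encoder and a shorter visual-token sequence each reduce TTFT directly.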

Apple has also released FastVLM's source code and model weights on the Hugging Face platform, allowing researchers and developers to explore the technology more closely. In addition, an iOS/macOS demo application built on MLX has been published, demonstrating that FastVLM can run locally on mobile devices such as the iPhone. Together, these releases could usher in a new era of on-device AI experiences.